Summer 2020

Team members: Sebastian Bruno, Veronica Chen, Alice Huang, Melanie King, Michelle Tong, Josh Vogel

In partnership with Smithsonian Institution Exhibits

Animation code development by Veronica Chen

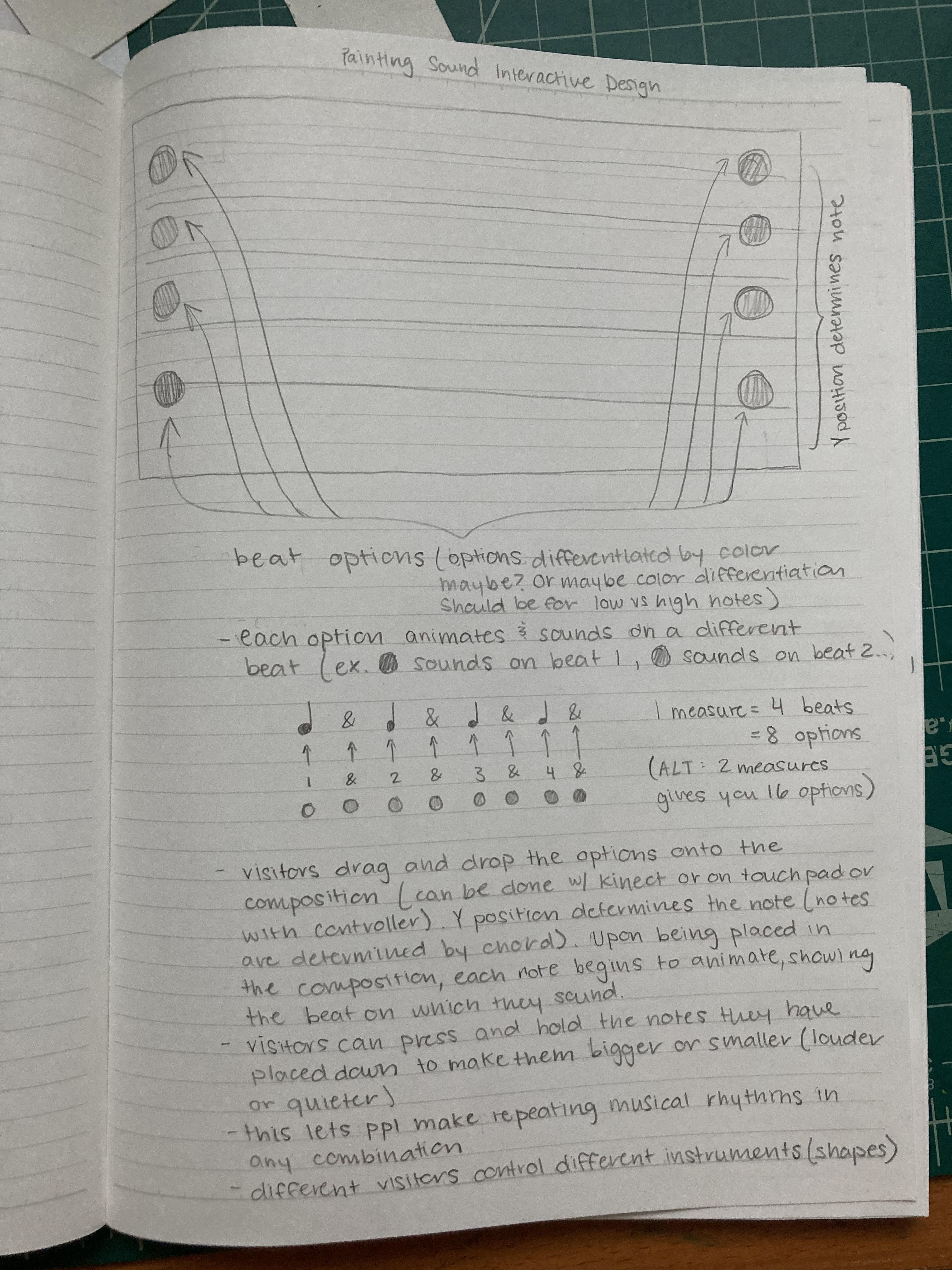

Our goal is to provide people who are d/Deaf or hard of hearing with an interactive audio-visual composition-making experience that produces a specific emotional response associated with a certain type of music. Using motion-sensing technology, visitors can gesture in front of a display to create a visual and auditory output, which is tailored to the specific emotion that the visitor would like to portray. Haptic feedback transmitted via a touchpad provides another layer of musical experience.

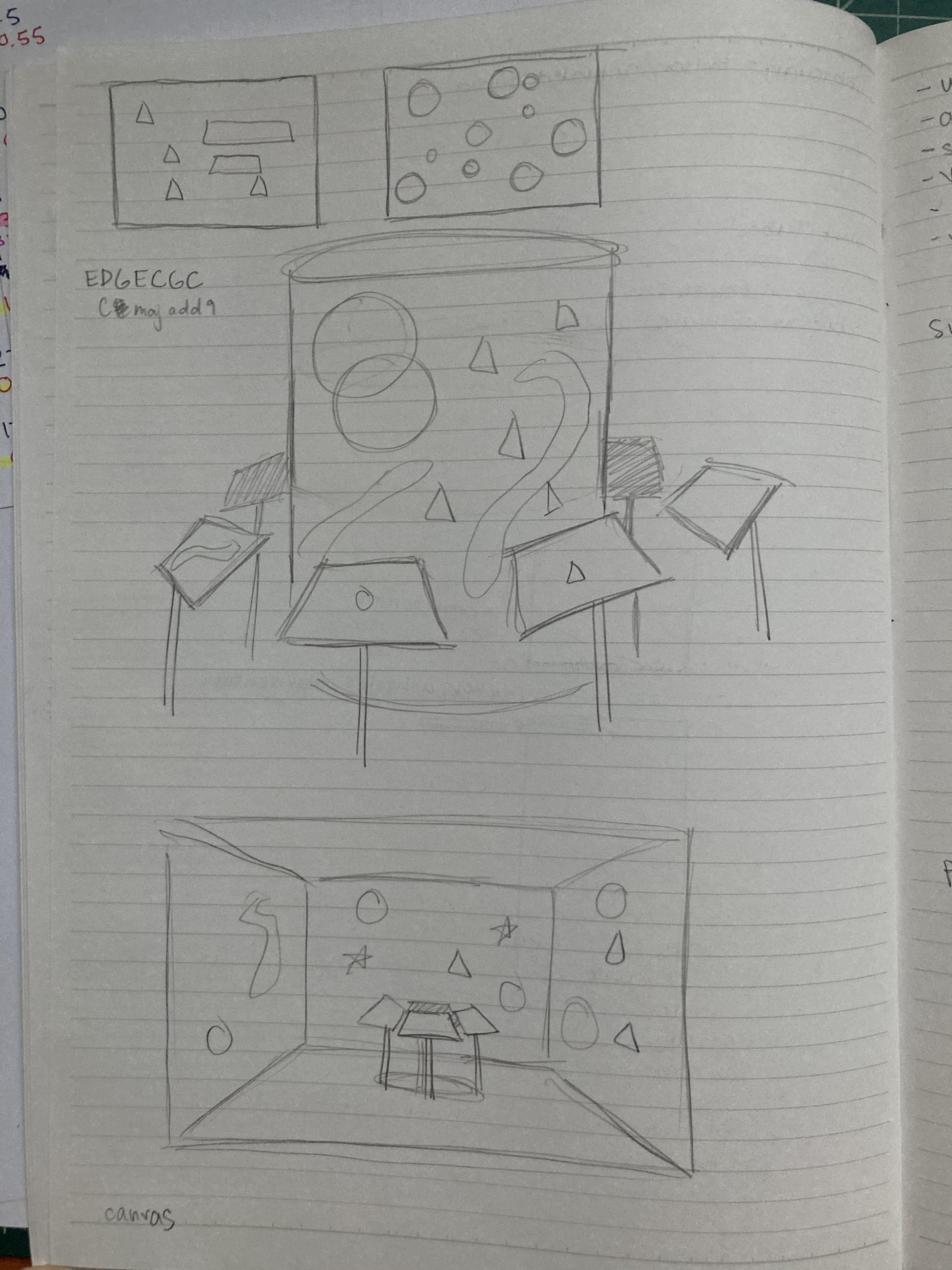

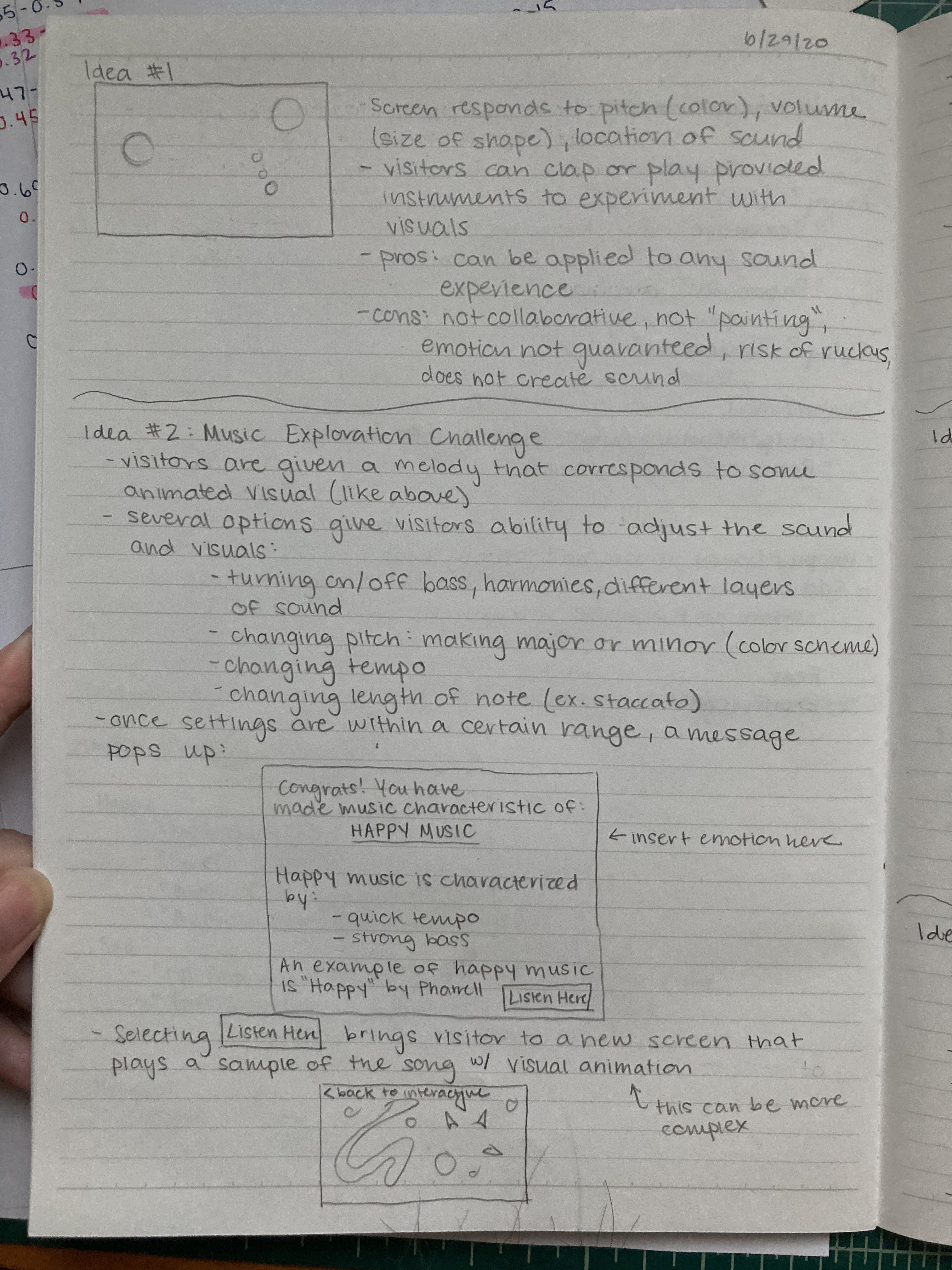

Concept Ideation by Veronica Chen

Problem:

We were asked to consider how musical exhibits at the Smithsonian can be made accessible to visitors who are d/Deaf or hard of hearing. Specifically, we investigated how to “create an interactive experience that demonstrates how music can convey an emotion that is accessible to visitors who are D/deaf or hard of hearing”.

Solution:

To fulfill this objective, we developed the concept of an immersive, interactive experience that allows visitors to explore the relationship between emotion and music. Our solution is two-fold: an educational portion that explains the components of music that affect its perceived emotion, and an interactive, hands-on portion in which visitors explore combinations of these components. By including visual, auditory, and tactile components in the interactive portion, the exhibit creates its own language for conveying emotion to the visitor.

Haptic feedback, delivered through a transducer, mimics heartbeat patterns in order to reflect the emotions conveyed through the audio-visual display and provoke a deeper emotional reaction in the user. For example, "happy" beats at a quick 110 bpm, "calm" at a slow 50 bpm, and "fearful" at 60 bpm with syncopation. Shown to the right are the haptic patterns corresponding to various emotions, developed by Josh Vogel and Melanie King.
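To make the tempo arithmetic concrete, here is a minimal sketch of how pulse timings could be derived from the heartbeat tempos listed above. It is not the team's actual haptic code: the function name `beat_times`, the `PATTERNS` table layout, and the syncopation amount (every second pulse delayed by a quarter of a beat) are all assumptions made for illustration. Only the bpm values come from the description.

```python
def beat_times(bpm, beats, syncopation=0.0):
    """Return pulse onset times in seconds for `beats` pulses at `bpm`.

    `syncopation` is a hypothetical parameter: the fraction of a beat by
    which every second pulse is delayed (0.0 means evenly spaced pulses).
    """
    interval = 60.0 / bpm  # seconds between evenly spaced pulses
    times = []
    for i in range(beats):
        # Delay odd-numbered pulses to create an uneven, syncopated feel.
        offset = syncopation * interval if i % 2 == 1 else 0.0
        times.append(i * interval + offset)
    return times


# Tempos are taken from the text; the 0.25 syncopation value is assumed.
PATTERNS = {
    "happy": {"bpm": 110, "syncopation": 0.0},
    "calm": {"bpm": 50, "syncopation": 0.0},
    "fearful": {"bpm": 60, "syncopation": 0.25},
}

if __name__ == "__main__":
    for emotion, params in PATTERNS.items():
        onsets = beat_times(params["bpm"], beats=4, syncopation=params["syncopation"])
        print(emotion, [round(t, 3) for t in onsets])
```

At 110 bpm the pulses land roughly 0.55 seconds apart, while at 50 bpm they are 1.2 seconds apart, which is the difference a visitor would feel between the "happy" and "calm" patterns.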

(above: final gesture-based audio-visual code by Michelle Tong and Alice Huang. Each mode corresponds to a unique set of colors, chords, and instruments that represents a certain emotion. Demonstrated above are happy, sad, fearful, angry, excited, and calm.)